Unfortunately, I've decided against moving to a dedicated server just now.

After a little discussion with the rest of the team at Jambour Digital, we all decided it would be better to postpone the move for another few months.

However, setting up the dedicated server made me realise how easily I could write a script to set up a new server, so that's exactly what I did. The script automates the whole process: it loads all of the SQL into the databases, sets up the required applications and starts HTTPd.

To set up the new VPS, all I needed to do was run the script.
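
The script itself isn't shown here, so what follows is a hypothetical Python sketch of the same sequence of steps. It assumes a Red Hat-style distribution (dnf, with Apache's service named httpd), MariaDB, and a folder of SQL dumps; the package list, folder name and service names are placeholders, and it only prints what it would do unless told otherwise.

```python
import subprocess
from pathlib import Path

# Hypothetical values: adjust to the packages and dump location actually used.
PACKAGES = ["httpd", "mariadb-server", "php"]
SQL_DIR = Path("sql-dumps")


def plan(sql_dir=SQL_DIR, packages=PACKAGES):
    """Return the setup steps, in order, as (command, stdin_file) pairs."""
    steps = [
        (["dnf", "install", "-y", *packages], None),
        (["systemctl", "enable", "--now", "mariadb"], None),
    ]
    # Assumes each dump was made with `mysqldump --databases`, so the file
    # itself creates and selects its own database.
    for dump in sorted(sql_dir.glob("*.sql")):
        steps.append((["mysql"], dump))
    # Start the web server last, once its databases are in place.
    steps.append((["systemctl", "enable", "--now", "httpd"], None))
    return steps


def run(steps, dry_run=True):
    """Print each step; only execute it when dry_run is False."""
    for cmd, stdin_file in steps:
        shown = " ".join(cmd) + (f" < {stdin_file}" if stdin_file else "")
        print(("would run: " if dry_run else "running: ") + shown)
        if dry_run:
            continue
        if stdin_file is None:
            subprocess.run(cmd, check=True)
        else:
            with open(stdin_file, "rb") as f:
                subprocess.run(cmd, stdin=f, check=True)


if __name__ == "__main__":
    run(plan())  # dry run by default; use run(plan(), dry_run=False) on a fresh VPS
```

Keeping the plan separate from its execution means the whole sequence can be checked with a dry run before it touches a new machine.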

Moving away from the dedicated server is a sad moment for me, as I was so close to getting it perfect, but because the connection is unreliable (it is not a business broadband connection), I could not continue to use it.

A few months ago I had an idea that would improve start-up times for ZPE severalfold. I tried to implement it once before and it failed, so I reverted to a backup and left it alone for a while. Well, I have at last implemented this feature.

This feature improves ZPE's performance and lowers its start-up times, memory footprint and CPU usage. Development is a little more complicated because it uses reflection rather than the object-based code used before, but I know what I am doing with this. ZPE has now reached the 50,000 lines of code mark, even after my latest purge, which took out over 20,000 lines of code.

The June release of ZPE will be available at the end of next week, with many minor improvements and additions. Today really does mark a monumental achievement.

Over my five years as a teacher, I have developed quite a few pretty handy tools for teaching. For the most part, those tools have only been used by me.

Some of the tools, such as BalfVote, are nearly ready for the general public, but others remain behind closed doors for my use only.

Well, today that is about to change with my plan to amalgamate these tools. So far, my teaching tools are as follows:

- BalfVote

- PlanIt

- Timetabler

- Teacher Organiser report writer

- Course planner generator

My vision is that these five tools merge into one collective group of tools with one central location to use them.

It was one year ago that I was offered the amazing job I now have. Tuesday the 24th of May 2022 was the day I got the job I had always wanted to do!

I've wanted to do this for a long time - a dedicated server!

For the last five years, I've been running Jambour and all hosted websites using a VPS (virtual private server) with Digital Ocean - an absolutely marvellous company. Well, not any more! As of yesterday, most of my websites have been moved to my own dedicated server, sitting there in my office.

There are massive benefits to this.

Firstly, cost. It's actually much cheaper for me to run a dedicated server in my house than to rent a virtual part of a server. Our prices kept going up: we started off paying £7 a month for our virtual private server in 2018, and by the end of 2022 we were paying over £26.
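
Taking the two monthly prices at face value, the rise works out roughly as follows (the true figure is slightly higher, since the later price was over £26):

$$\frac{\pounds 26 - \pounds 7}{\pounds 7} = \frac{19}{7} \approx 2.71 \;\Rightarrow\; \text{a rise of about } 271\%, \text{ i.e. } \tfrac{26}{7} \approx 3.7\times \text{ the original price}$$

$$12 \times \pounds 7 = \pounds 84 \text{ a year} \quad\longrightarrow\quad 12 \times \pounds 26 = \pounds 312 \text{ a year}$$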

Secondly, upgrading is much easier. We can quickly provision a second system running an updated operating system and have it up and running with less than a second of downtime.
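
One common way to get a near-instant switch like that (an assumption here, not necessarily the setup used) is a blue-green swap: the web server serves from a path that is a symlink, and changing systems means atomically repointing that symlink. A minimal Python sketch, POSIX only, with hypothetical directory names:

```python
import os
import tempfile


def switch_live(link_path, new_target):
    """Atomically repoint the symlink at link_path to new_target (POSIX only).

    A temporary link is created next to the live one and then renamed over it.
    os.replace maps to rename(2), which is atomic, so anything reading
    link_path sees either the old target or the new one, never a gap."""
    tmp_link = link_path + ".tmp"
    if os.path.lexists(tmp_link):  # clear a leftover from an interrupted switch
        os.remove(tmp_link)
    os.symlink(new_target, tmp_link)
    os.replace(tmp_link, link_path)


# Demo: "blue" is the current system, "green" the freshly provisioned one.
with tempfile.TemporaryDirectory() as root:
    blue = os.path.join(root, "blue")
    green = os.path.join(root, "green")
    os.mkdir(blue)
    os.mkdir(green)
    live = os.path.join(root, "live")
    os.symlink(blue, live)
    switch_live(live, green)
    print(os.readlink(live) == green)  # True
```

Switching back is the same call with the old target, which makes rolling back a failed upgrade just as quick.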

Thirdly, security. Unlike with Digital Ocean, the only way to connect to the server to manage it is from within my house; there is absolutely no other way to do this.
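
Restricting management access to the house is usually enforced at the firewall or in the SSH daemon's configuration, but the rule itself is simple to express. A minimal sketch using Python's `ipaddress` module, assuming a hypothetical home subnet of 192.168.1.0/24:

```python
import ipaddress

# Hypothetical home network range; replace with the real LAN subnet(s).
ADMIN_NETWORKS = [ipaddress.ip_network("192.168.1.0/24")]


def admin_allowed(client_ip):
    """True only if the connecting address is inside one of the home networks."""
    addr = ipaddress.ip_address(client_ip)
    # Membership is False (not an error) when IPv4 and IPv6 are mixed.
    return any(addr in net for net in ADMIN_NETWORKS)


print(admin_allowed("192.168.1.20"))  # True
print(admin_allowed("203.0.113.7"))   # False
```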

Fourthly, performance is much higher. A dedicated system can have whatever performance you want: we've got a 12-core system as opposed to a system with 4 virtual cores.

Fifthly, storage space. We have a whopping 256GB, plus 2TB of additional space, as opposed to 50GB.

Finally, ownership. I now physically own the server and can shut it down or turn it on whenever I need to.

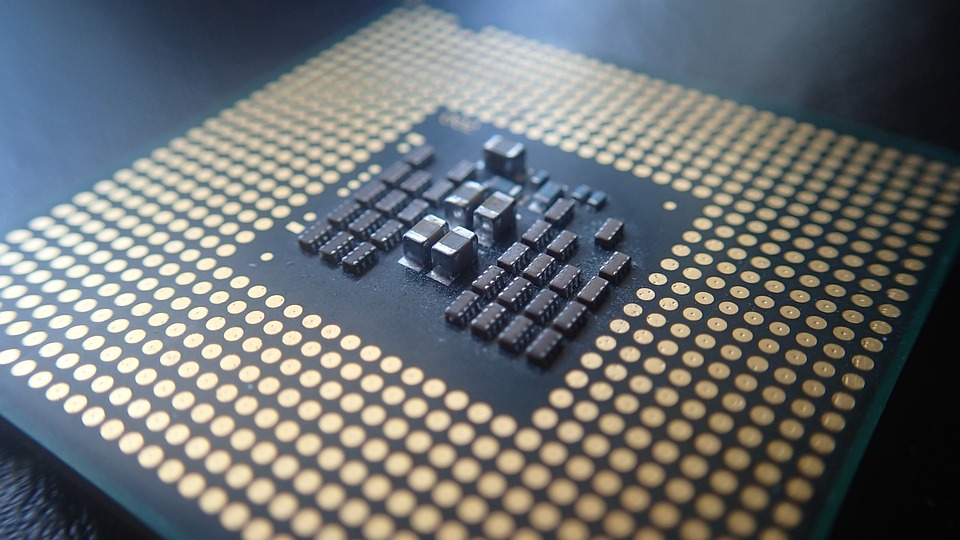

After 15 years of the Core i series, Intel finally appears to be changing the branding they use for their products. In a tweet by @Bernard_P, it was stated that Intel would be making changes to the branding of their CPUs.

> Yes, we are making brand changes as we’re at an inflection point in our client roadmap in preparation for the upcoming launch of our #MeteorLake processors. We will provide more details regarding these exciting changes in the coming weeks! #Intel
>
> Bernard Fernandes (@Bernard_P), May 1, 2023

This will be the first time Intel has done this since the introduction of the Core i series in 2008.

To me, this seems like a waste of money and shows little thought for their clientele. It's a radical move by Intel and it may backfire on them. We'll just have to wait and see.

I have spent a lot of my personal free time building a company which has achieved a lot, brings in a bit of revenue for myself and provides an excellent service for its clients. Still, I have decided that I cannot sustain the level of commitment I have provided over the last few years as the Executive Officer of the company.

Over the last few years, despite not actually being the person in charge of customer relations, I have been the one who has had to carry out most of the meetings and much more. In a small company with only three members, it may seem silly to delegate tasks like this, but I have realised that if we don't, I end up doing everything. That means I cannot focus on teaching, which is my primary career (and I do a lot of work on my teaching content whilst I am at home).

In July at our annual meeting I will step down as the Executive Officer and hand most of the work I do over to Mike who is currently in charge of Marketing. I will maintain the role of Technical Officer in order to continue utilising my server management and development skills to keep the company's infrastructure stable.

Moore's Law was kind of like the golden rule for computer systems. Formulated by Intel co-founder Gordon Moore, the rule has been the centrepiece and fundamental principle behind the ever-improving computer systems we have today. It basically spelt out the future: the number of transistors on a chip would double roughly every two years, giving computers better performance year after year.

Manufacturers kept up with it not by increasing the package size of CPUs but by reducing the feature size. Take my EliteBook, for example. It has a Ryzen 7 6850U processor, built on a 6-nanometer process. That figure describes the fabrication process (often called the process node), and it roughly means that the features of each transistor within a package (a CPU die) are around that size, although nowadays the node name is more of a marketing label than a literal measurement. The smaller the feature size, the more likely it is for something to go wrong during production, making CPUs more and more difficult to manufacture as feature sizes shrink. Not only that, it has always been said that we will be unable to get the feature size smaller than the size of an atom (approximately 0.1 nanometers). The theoretical boundary for the smallest feature size that we can manufacture is approximately 0.5 nanometers - that's not that far away from where we are at 4 nanometers in 2023.
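
As a rough, idealised illustration (it treats node names as literal sizes, which modern node names are not), shrinking from 4 nanometers to the 0.5-nanometer boundary leaves this much headroom, since transistor density scales roughly with the inverse square of the feature size:

$$\frac{4\ \text{nm}}{0.5\ \text{nm}} = 8, \qquad \text{density gain} \approx 8^2 = 64 = 2^6$$

That is only about six more doublings; at Moore's pace of one doubling every two years, that would be used up in around twelve years.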

Over the last few years, manufacturers have been trying to squeeze every bit of extra performance out of the latest chips. Apple has been increasing the physical size of its processors by joining several chips together (think of the M1 Ultra, which is simply two M1 Max chips stuck together with a little bit of magic). This makes the processor very large and unsuitable for smaller devices. AMD, on the other hand, has moved to a design where wastage is less frequent, increasing the yield of good processors and in turn allowing them to cram more performance into their dies.

To combat such an issue, the manufacturers of some CPUs moved to a chiplet design. In a chiplet design, components such as the memory controller and IO controllers (USB and so on) are built as separate dies that sit alongside the processor cores in one package, in contrast to the monolithic architecture used in the past. The first time I experienced a chiplet-based CPU was the Intel Core 2 Quad, which was literally two Core 2 Duo dies in one package. The issue is that this takes more space than building a chip using a monolithic architecture, where the entire processor resides within one die. There are also other complications, such as communication between these dies, but they can be easily overcome: once it has been done for one chip, the designs can continue to be used in other chips. There are also power consumption concerns when two CPU dies are put in the same package, but with chiplets for IO and memory, this actually reduces the distance between the CPU and those components, reducing power consumption and increasing performance. Chiplet design also keeps costs down, as it reduces the need to bin an otherwise good processor when one section of it fails. For example, if all IO was built into the actual CPU die (as is the case in a monolithic architecture) and only one part of the IO section failed, the whole processing unit would be binned. With a chiplet design, a failure in the IO section affects only that chiplet - a much less costly fix, as all it requires is a replacement IO die.
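
The cost argument can be made concrete with the simple Poisson yield model, in which the chance of a die being defect-free falls exponentially with its area. The 600 mm² design and the defect density below are hypothetical, chosen only to show the shape of the effect:

```python
import math


def die_yield(area_mm2, defects_per_mm2):
    """Poisson yield model: the chance that a die of this area has no defects."""
    return math.exp(-area_mm2 * defects_per_mm2)


def silicon_per_good_cpu(total_area_mm2, dies, defects_per_mm2):
    """Expected wafer area (mm^2) used up per working CPU.

    The design is split into `dies` equal pieces that are tested separately,
    so a defect only scraps the piece it lands on (dies=1 is monolithic).
    Packaging and die-to-die interconnect overheads are ignored."""
    die_area = total_area_mm2 / dies
    return dies * die_area / die_yield(die_area, defects_per_mm2)


# Hypothetical figures: a 600 mm^2 design and 0.001 defects per mm^2.
print(round(silicon_per_good_cpu(600, 1, 0.001)))  # 1093 (monolithic)
print(round(silicon_per_good_cpu(600, 4, 0.001)))  # 697 (four chiplets)
```

In this example, splitting the same silicon into four separately tested dies cuts the expected wafer area per working CPU by over a third, though a real comparison would also have to count the extra area and packaging cost that chiplets add.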

Another way manufacturers are continuing to squeeze performance out of these chips is by building algorithms into them. This has been a feature of CPUs since at least the Pentium MMX, which brought a set of new instructions to improve the multimedia capabilities of computers back then. It basically means that instead of a programmer writing an algorithm, say for vector encoding, out of many general-purpose instructions, the CPU provides instructions (such as those in AVX-512) that do the work directly. Because the operation is built into the CPU, it runs much faster. This is known as hardware acceleration. You'll see that Apple has done a lot of this in their M1 and M2 chips over the years to give them an even better performance result than the Intel CPUs they replaced. By doing this, Apple has managed to improve the effectiveness of its software through the use of hardware. This could become a problem, however, as it might damage the cross-compatibility of software that can run on any operating system and any platform. I say this because software developed to use AVX-512 will very likely still work on a system without AVX-512 instructions, provided it includes a fallback path. But when a feature is built in and only exposed through libraries specific to one GPU or CPU, cross-compatibility may not be possible without writing massive amounts of additional code (for example, DirectX on Windows and Metal on macOS both cause issues when porting).
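
The fallback idea can be sketched as runtime dispatch: detect what the CPU supports and pick a code path accordingly. This is a hypothetical Python illustration; real code would call AVX-512 intrinsics from C or rely on a library, and `dot_lanes` below only mimics the shape of a vector loop (eight lanes, as with eight 64-bit values in a 512-bit register) without being any faster. Feature detection reads the Linux `/proc/cpuinfo` flags, where `avx512f` denotes AVX-512 Foundation; on other systems it finds no flags and uses the portable path.

```python
import os


def cpu_flags(path="/proc/cpuinfo"):
    """Return the CPU feature flags reported by Linux, or an empty set elsewhere."""
    if not os.path.exists(path):
        return set()
    with open(path) as f:
        for line in f:
            if line.startswith("flags"):
                return set(line.split(":", 1)[1].split())
    return set()


def dot_scalar(a, b):
    """Portable fallback: one multiply-add per loop iteration."""
    total = 0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_lanes(a, b, lanes=8):
    """Stand-in for a SIMD path: works on `lanes` elements per step."""
    n = min(len(a), len(b))
    acc = [0] * lanes
    full = n - n % lanes
    for i in range(0, full, lanes):
        for j in range(lanes):
            acc[j] += a[i + j] * b[i + j]
    total = sum(acc)
    for i in range(full, n):  # leftover elements that don't fill a whole vector
        total += a[i] * b[i]
    return total


def choose_dot(flags):
    """Use the 'accelerated' path only when the CPU advertises AVX-512."""
    return dot_lanes if "avx512f" in flags else dot_scalar


dot = choose_dot(cpu_flags())
print(dot([1, 2, 3], [4, 5, 6]))  # 32 on either path
```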

I have believed since I was a teenager that the second method is indeed the way forward, but it would only really work in a world with a single CPU/GPU architecture, a bit like if everyone were using x86. That's never going to happen, but perhaps if we had a library (Vulkan) that could abstract over the underlying APIs or hardware and make things simpler for developers, this would actually be the best option.
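
An abstraction layer of this kind boils down to one interface with several platform-specific implementations behind it, so application code never mentions the platform. A minimal Python sketch with hypothetical backend classes that only return labels; real backends would call into Metal, Direct3D or Vulkan:

```python
import sys
from abc import ABC, abstractmethod


class GraphicsBackend(ABC):
    """What the application is allowed to ask for, whatever the platform."""

    @abstractmethod
    def draw_triangle(self) -> str:
        ...


# Hypothetical backends: stand-ins for code that would call the real APIs.
class MetalBackend(GraphicsBackend):
    def draw_triangle(self) -> str:
        return "metal: triangle"


class DirectXBackend(GraphicsBackend):
    def draw_triangle(self) -> str:
        return "directx: triangle"


class VulkanBackend(GraphicsBackend):
    def draw_triangle(self) -> str:
        return "vulkan: triangle"


def backend_for(platform: str) -> GraphicsBackend:
    """Pick an implementation from a sys.platform-style string."""
    if platform == "darwin":
        return MetalBackend()
    if platform == "win32":
        return DirectXBackend()
    return VulkanBackend()


def render_frame(backend: GraphicsBackend) -> str:
    # Application code only ever talks to the abstract interface.
    return backend.draw_triangle()


print(render_frame(backend_for(sys.platform)))
```

Porting then means writing one new backend rather than touching every call site, which is the saving such a library would offer.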

After two release dates were cancelled, I'm finally able to release ZPE 1.11.4, aka OmegaZ, which will feature all of the previously discussed features such as union types and inline iteration etc. but will also include a major update to the record data type.

Previously, records looked like this:

record structure person { string forename = "" }

Now, with ZPE 1.11.4, the structure keyword is optional. As a further piece of syntactic sugar, there is also an optional is keyword, so both of the following are valid:

record person { string forename = "" }

record person is { string forename = "" }

As well as this, record instances are created using the new keyword:

$x = new person()

And fields of the record are accessed using dot notation:

$x.forename = "John"

print($x.forename)

Whilst the title of this post is somewhat humorous, the topic of this post is far from that.

Recently, several prominent YouTube channels, such as Paul Hibbert and Linus Tech Tips, have been hacked and have experienced account shutdowns.

Both channels experienced similar situations, where the theft of a cookie was all that was needed to get into their accounts. And it makes sense too. In the past, I have used session IDs to switch between computers whilst keeping my session the same, so really all that is needed to get into a website without authenticating is that cookie. Ultimately, this is why I don't allow websites on my server to do this. However, it still leaves security issues with other websites.

What actually happens in these attacks is that the user logs into the website as normal, and a cookie is transferred to the user's computer. The cookie is sent back to the web server each time the client requests something, identifying who they are. The session is stored on the server under the ID defined in the cookie and contains information about who they are - it's fairly simple. But if a hacker obtains this ID, they can put it into their own browser and they too can appear to be logged in as the user.
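
The mechanism can be shown in a few lines. Below is a hypothetical Python sketch of an in-memory session store; it is not necessarily how any particular site (or this server) does it. With `bind_to_client=False`, the cookie alone is proof of identity, which is exactly what a stolen cookie exploits. Binding the session to the client's IP address and user agent is one simple mitigation, shown here as an assumption: it breaks sessions for users whose IP legitimately changes, and a user agent can be copied, so it raises the bar rather than closing the hole.

```python
import secrets

# Hypothetical in-memory session store: session ID -> session data.
SESSIONS = {}


def log_in(username, client_ip, user_agent):
    """Create a session and return its ID, which the server sends as a cookie."""
    session_id = secrets.token_urlsafe(32)  # long and random, so it cannot be guessed
    SESSIONS[session_id] = {"user": username, "ip": client_ip, "ua": user_agent}
    return session_id


def current_user(session_id, client_ip, user_agent, bind_to_client=True):
    """Return the logged-in user for a request, or None."""
    session = SESSIONS.get(session_id)
    if session is None:
        return None
    # Without binding, whoever presents the cookie is treated as the user.
    if bind_to_client and (session["ip"] != client_ip or session["ua"] != user_agent):
        return None
    return session["user"]


sid = log_in("alice", "192.0.2.10", "Firefox")
print(current_user(sid, "192.0.2.10", "Firefox"))                          # alice
print(current_user(sid, "198.51.100.7", "Firefox", bind_to_client=False))  # alice: stolen cookie accepted
print(current_user(sid, "198.51.100.7", "Firefox"))                        # None: other computer rejected
```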